Problem

Financial news sentiment analysis is challenging because of domain-specific language and class imbalance. The goal was to fine-tune a BERT model to classify financial headlines as positive, negative, or neutral, with particular focus on the negative class, which initially underperformed.

Data

The dataset consists of 5,842 financial news headlines, each labeled as positive, negative, or neutral, and was used to fine-tune a BERT model (110M parameters). Preprocessing had to account for financial terminology and domain-specific language patterns.

Approach

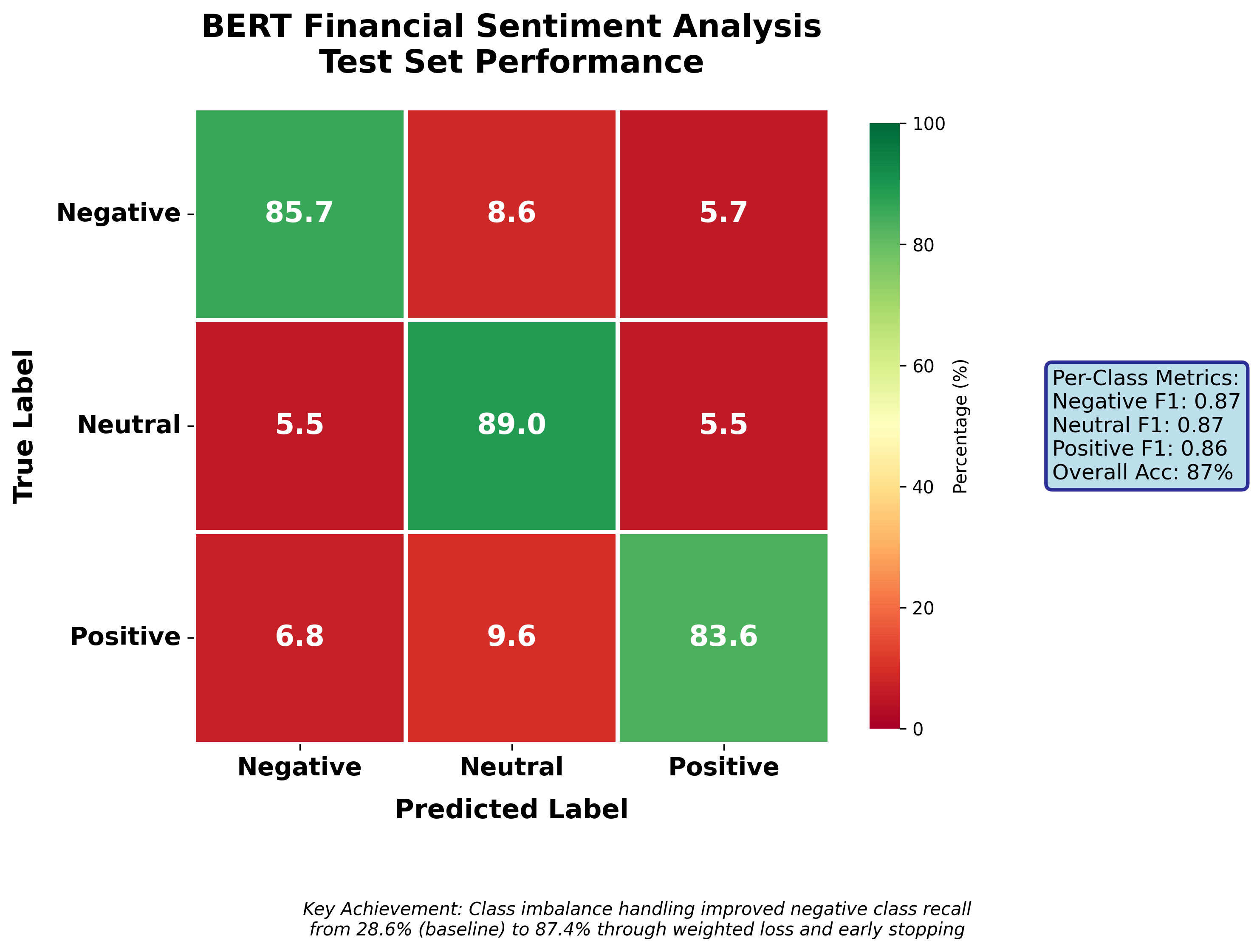

Training used a weighted loss function to address class imbalance, together with early stopping. Fine-tuning focused on improving negative-class recall, which was initially 28.6%.
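The early stopping mentioned above can be sketched in a few lines of plain Python. This is only an illustration, not the project's training code: the class name `EarlyStopping`, the `patience` and `min_delta` values, and the choice of a validation score as the monitored quantity are all assumptions, since the write-up does not state them.

```python
from dataclasses import dataclass


@dataclass
class EarlyStopping:
    """Signal a stop once a monitored score has stopped improving."""
    patience: int = 2          # assumed value; not stated in the write-up
    min_delta: float = 0.0     # smallest increase that counts as progress
    best: float = float("-inf")
    bad_epochs: int = 0

    def step(self, score: float) -> bool:
        """Record one epoch's validation score; return True to stop."""
        if score > self.best + self.min_delta:
            self.best = score
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def epochs_run(scores, patience=2):
    """Return how many epochs complete before early stopping triggers."""
    stopper = EarlyStopping(patience=patience)
    for epoch, score in enumerate(scores, start=1):
        if stopper.step(score):
            return epoch
    return len(scores)
```

In a real training loop, `step` would be called once per epoch with a validation metric (for example negative-class recall), and the checkpoint with the best score would be kept.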

- Model fine-tuning and training pipeline

- Data preprocessing and evaluation metrics

- Class imbalance handling with weighted loss
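To make the weighted-loss idea concrete, here is a minimal, dependency-free sketch. The write-up does not say how the class weights were chosen, so inverse-frequency weighting is an assumption, and the function names are hypothetical. The loss divides by the sum of the applied weights, which is the same normalization `torch.nn.CrossEntropyLoss` uses when given a `weight` tensor with mean reduction.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Assumed scheme: rarer classes receive proportionally larger weights.
    """
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


def weighted_cross_entropy(logits, targets, weights):
    """Weighted mean of per-example cross-entropy.

    logits:  list of dicts mapping class name -> raw score
    targets: list of true class names
    weights: dict mapping class name -> class weight
    """
    total, weight_sum = 0.0, 0.0
    for scores, target in zip(logits, targets):
        # Numerically stable log-sum-exp over the class scores.
        m = max(scores.values())
        log_z = m + math.log(sum(math.exp(s - m) for s in scores.values()))
        nll = log_z - scores[target]      # negative log-likelihood
        total += weights[target] * nll
        weight_sum += weights[target]
    return total / weight_sum
```

With this weighting, a misclassified example from a rare class contributes more to the loss than one from a common class, which pushes the model toward better recall on the minority class.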

Evaluation

The model reached 87% test accuracy with balanced F1 scores across all three sentiment classes. Most notably, negative-class recall improved from 28.6% to 85.7%, so the previously underperforming class now performs strongly.
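For reference, per-class recall and F1 of the kind reported above can be computed as follows. This is a generic sketch; the function name `per_class_report` and the label strings are assumptions, and no project data is reproduced here.

```python
def per_class_report(y_true, y_pred, classes=("negative", "neutral", "positive")):
    """Return precision, recall, and F1 for each class."""
    report = {}
    for cls in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == cls and p == cls for t, p in pairs)  # true positives
        fp = sum(t != cls and p == cls for t, p in pairs)  # false positives
        fn = sum(t == cls and p != cls for t, p in pairs)  # false negatives
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[cls] = {"precision": precision, "recall": recall, "f1": f1}
    return report


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

Recall for the negative class is the share of truly negative headlines that the model labels negative, which is why it can be low even when overall accuracy looks acceptable on an imbalanced dataset.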

Results

The fine-tuned model classifies financial news sentiment well across all classes, and is particularly strong at identifying negative sentiment, which is critical for financial applications. The weighted loss approach was effective at addressing the class imbalance.